If you want to learn how to add a custom robots.txt file in Blogger, you have come to the right article. In this post, I’m sharing how to add a custom robots.txt file in Blogger (Blogspot). Before we jump in, I would like to explain what a robots.txt file is and why you should add a custom robots.txt file to your Blogger blog.

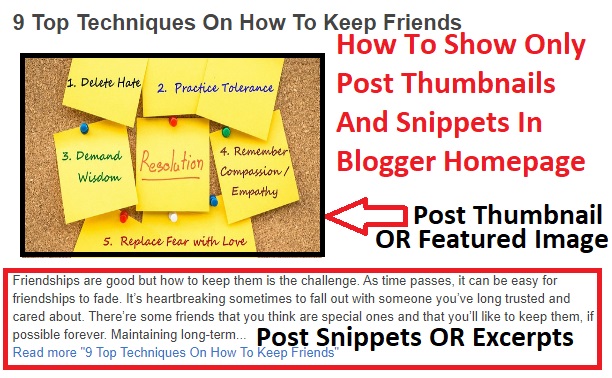

Other Tutorials:

How To Set Custom Header Tags For Blogger Blog Step By Step - 4 Steps

What Is A Custom Robots.txt File? Why Do I Need To Add A Custom Robots.txt File In Blogger?

Robots.txt is a plain-text file containing a few lines of simple directives. It is stored at the root of your website’s server and tells web crawlers which parts of your site they may crawl. That means you can keep crawlers away from pages that are not important enough to appear in search engines like Google, such as your blog’s label pages, a demo page, or similar pages. (Strictly speaking, robots.txt controls crawling, not indexing: a blocked URL can still show up in search results if other pages link to it.) Always remember that well-behaved search crawlers read the robots.txt file before crawling any page of your site.

In Blogger, the search feature is tied to labels: label pages live under /search/label/. If you are not using labels carefully on each post, you should disallow crawling of the search links. By default, Blogger already disallows crawling of the search links. In robots.txt you can also specify the location of your sitemap file. A sitemap is a file on the server that lists the permalinks of all the posts on your website or blog. Sitemaps are usually in XML format, e.g., sitemap.xml.
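To make the "read before crawling" point concrete, here is a minimal Python sketch of where a well-behaved crawler looks: robots.txt always sits at the root of the host, so the crawler derives its address from whatever page it wants to visit. The function name `robots_txt_url` and the example blog address are illustrative assumptions, not anything provided by Blogger.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt URL that governs page_url (hypothetical helper).

    robots.txt lives at the root of the scheme + host, so the path,
    query string and fragment of the page URL are discarded.
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


# Placeholder blog URL: before fetching this post, a crawler checks the root file.
print(robots_txt_url("https://www.yourblogurl.blogspot.com/2020/03/post-url.html"))
# https://www.yourblogurl.blogspot.com/robots.txt
```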

All About The Custom Robots.txt File In Blogger: Step By Step With An Explanation Of Each Directive

Every site hosted on Blogger has its own default robots.txt file, which looks something like this:

```text
User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Disallow: /b
Allow: /

Sitemap: https://www.yourblogurl.blogspot.com/feeds/posts/default?orderby=updated
```

Let’s Understand The Custom Robots.txt File: What Each Directive Means

The code above is divided into several segments. Let us first study each of them; later we will see how to add a custom robots.txt file to a Blogger site.

User-agent: Mediapartners-Google

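This group applies to the Google AdSense crawler (Mediapartners-Google) and helps it serve better-targeted ads on your blog. The empty Disallow: line below it means the AdSense crawler may access every page. Whether or not you use Google AdSense on your blog, simply leave it as it is.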
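The effect of these two groups can be checked offline with Python’s standard `urllib.robotparser`. This sketch (the blog URL is a placeholder) shows that the empty `Disallow:` lets the AdSense crawler fetch a label page that other crawlers are told to skip. Note that Python’s parser is simpler than Google’s (plain prefix matching, and the first matching rule wins), although for these rules the results are the same.

```python
from urllib.robotparser import RobotFileParser

# Blogger's default rules as quoted in this article (placeholder blog URL).
DEFAULT_ROBOTS = """\
User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Disallow: /b
Allow: /

Sitemap: https://www.yourblogurl.blogspot.com/feeds/posts/default?orderby=updated
"""

parser = RobotFileParser()
parser.parse(DEFAULT_ROBOTS.splitlines())

label_page = "https://www.yourblogurl.blogspot.com/search/label/SEO"

# The AdSense crawler matches its own group, where the empty Disallow allows everything.
print(parser.can_fetch("Mediapartners-Google", label_page))  # True
# Any other crawler falls through to the "*" group, which disallows /search.
print(parser.can_fetch("Googlebot", label_page))  # False
```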

User-agent: *

This group applies to all other crawlers; the asterisk is a wildcard that matches any robot. By default, our blog’s label (tag) page links are blocked from search crawlers, which means search engines won’t crawl our label pages because of the line below.

Disallow: /search

That means any link whose path begins with /search right after the domain name will be ignored. For example, the link of a label page called SEO looks like this:

https://www.yourblogurl.blogspot.com/search/label/SEO

If we remove Disallow: /search from the code above, crawlers can also reach our label and search pages, so they can crawl practically the entire blog, including all its posts and pages. Allow: / matches every path on the blog (every URL path starts with /), so crawlers may crawl the homepage and any other page that no Disallow line blocks.
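Here is a small sketch, again using Python’s standard-library parser with placeholder URLs, that compares the default `*` rules with a copy that drops `Disallow: /search`. With the line present, only the label page is blocked; without it, all three paths are crawlable, which also shows that `Allow: /` covers every path and not just the homepage. The helper name `allowed_paths` is an assumption for illustration.

```python
from urllib.robotparser import RobotFileParser

BLOG = "https://www.yourblogurl.blogspot.com"  # placeholder blog address

WITH_SEARCH_RULE = """\
User-agent: *
Disallow: /search
Disallow: /b
Allow: /
"""

# The same group with "Disallow: /search" removed.
WITHOUT_SEARCH_RULE = WITH_SEARCH_RULE.replace("Disallow: /search\n", "")


def allowed_paths(robots_txt: str, paths: list[str]) -> dict[str, bool]:
    """Map each path to whether Googlebot may crawl it under robots_txt (hypothetical helper)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {path: parser.can_fetch("Googlebot", BLOG + path) for path in paths}


paths = ["/", "/2020/03/post-url.html", "/search/label/SEO"]
print(allowed_paths(WITH_SEARCH_RULE, paths))
# {'/': True, '/2020/03/post-url.html': True, '/search/label/SEO': False}
print(allowed_paths(WITHOUT_SEARCH_RULE, paths))
# {'/': True, '/2020/03/post-url.html': True, '/search/label/SEO': True}
```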

How To Disallow A Particular Post: Suppose we want to exclude a specific post from crawling. We can add the line below to the code, under User-agent: *:

Disallow: /yyyy/mm/post-url.html

Here yyyy and mm denote the year and month the post was published, respectively. For instance, if we published a post in March 2020, we would use the following format:

Disallow: /2020/03/post-url.html

To make this task easy, simply copy the post URL and remove the domain from the beginning.
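The "copy the URL and remove the domain" trick is easy to script. The helper below, `disallow_rule`, is hypothetical: it keeps only the path of a post or page URL and drops the query string (such as Blogger’s mobile `?m=1`) and fragment, on the assumption that you want to block the page itself. It refuses to produce `Disallow: /`, which would block the whole blog.

```python
from urllib.parse import urlsplit


def disallow_rule(url: str) -> str:
    """Turn a full post or page URL into a robots.txt Disallow line.

    Only the path is kept; the scheme, domain, query string and fragment
    are dropped. Disallow values are prefixes, so the rule also covers the
    same URL with a query string such as ?m=1.
    """
    path = urlsplit(url).path
    if path in ("", "/"):
        # "Disallow: /" would block the whole blog, which is never the goal here.
        raise ValueError("URL points at the homepage, not a post or page")
    return f"Disallow: {path}"


print(disallow_rule("https://www.yourblogurl.blogspot.com/2020/03/post-url.html?m=1"))
# Disallow: /2020/03/post-url.html
```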

How To Disallow A Particular Page: To disallow a particular page, use exactly the same method as above. Simply copy the page URL and remove the site address from it, which will look like this:

Disallow: /p/page-url.html
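To confirm that post and page rules like these behave as intended before publishing them, you can add them to the `*` group and test the result with Python’s `urllib.robotparser`. One caveat when testing this way: Python’s parser applies the first matching rule in file order, so the extra `Disallow` lines must come before `Allow: /` (Google itself uses the most specific matching rule, so the order does not matter there). The post and page paths below are placeholders.

```python
from urllib.robotparser import RobotFileParser

BLOG = "https://www.yourblogurl.blogspot.com"  # placeholder blog address

# Placeholder post and page paths; replace them with your own.
# The extra Disallow lines sit before "Allow: /" because Python's parser is first-match.
ROBOTS = """\
User-agent: *
Disallow: /search
Disallow: /b
Disallow: /2020/03/post-url.html
Disallow: /p/page-url.html
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS.splitlines())

print(parser.can_fetch("Googlebot", BLOG + "/2020/03/post-url.html"))  # False: blocked post
print(parser.can_fetch("Googlebot", BLOG + "/p/page-url.html"))  # False: blocked page
print(parser.can_fetch("Googlebot", BLOG + "/p/about.html"))  # True: other pages still crawlable
```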

Sitemap: https://www.yourblogurl.blogspot.com/feeds/posts/default?orderby=UPDATED

This line gives the location of your blog’s sitemap. Listing your sitemap here helps crawlers discover your content more efficiently: when crawlers read your robots.txt file, they also find the route to your sitemap. If you include your sitemap in the robots.txt file, web crawlers can easily find your pages and posts and are less likely to miss any of them.
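A crawler that reads robots.txt picks up `Sitemap:` lines independently of the user-agent groups. Python’s `RobotFileParser.site_maps()` (available since Python 3.8) makes this visible; the blog URL below is a placeholder.

```python
from urllib.robotparser import RobotFileParser

# Placeholder blog URL in the Sitemap line.
ROBOTS = """\
User-agent: *
Disallow: /search
Allow: /

Sitemap: https://www.yourblogurl.blogspot.com/feeds/posts/default?orderby=UPDATED
"""

parser = RobotFileParser()
parser.parse(ROBOTS.splitlines())

# site_maps() returns the listed sitemap URLs, or None when there are none.
print(parser.site_maps())
# ['https://www.yourblogurl.blogspot.com/feeds/posts/default?orderby=UPDATED']
```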

To make this clearer, here are three types of custom robots.txt file:

Robots.txt Type 1

```text
User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Disallow: /b
Allow: /

Sitemap: https://www.yourblogurl.blogspot.com/sitemap.xml
```

Robots.txt Type 2

```text
User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Disallow: /b
Allow: /

Sitemap: https://www.yourblogurl.blogspot.com/feeds/posts/default?orderby=updated
```

Note: Don’t forget to replace https://www.yourblogurl.blogspot.com with your blog address or custom domain. The steps for adding the file are described in the How To Add Custom Robots.txt File In Blogger Step By Step section below. If you want search engine bots to crawl your most recent 500 posts, use robots.txt Type 2 with the 500-post sitemap line from the next note. If you already have more than 500 posts on your blog, add one more sitemap line, as in Type 3.

Note: The feed sitemap used in Type 2 (/feeds/posts/default?orderby=updated) only tells web crawlers about your 25 most recent posts. To increase the number of links in your sitemap, replace that sitemap line with the one below, which covers your 500 most recent posts.

Sitemap: http://example.blogspot.com/atom.xml?redirect=false&start-index=1&max-results=500

If you have more than 500 published posts on your blog, you can use two sitemaps like this:

Sitemap: http://example.blogspot.com/atom.xml?redirect=false&start-index=1&max-results=500

Sitemap: http://example.blogspot.com/atom.xml?redirect=false&start-index=501&max-results=500
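If your post count keeps growing, the sitemap lines can be generated instead of written by hand. This sketch assumes, as the article does, that one `atom.xml` request returns at most 500 posts, and it starts each block right after the previous one ends (start-index 1, 501, 1001, and so on) so no post is listed twice. The function name and example URL are illustrative.

```python
import math


def blogger_sitemap_lines(blog_url: str, post_count: int, page_size: int = 500) -> list[str]:
    """Return one Sitemap line per block of page_size posts (hypothetical helper).

    Assumption: a single atom.xml request returns at most page_size posts,
    so blocks start at 1, 1 + page_size, 1 + 2 * page_size, ...
    """
    base = blog_url.rstrip("/")
    blocks = max(1, math.ceil(post_count / page_size))  # always emit at least one line
    return [
        f"Sitemap: {base}/atom.xml?redirect=false"
        f"&start-index={1 + i * page_size}&max-results={page_size}"
        for i in range(blocks)
    ]


# Hypothetical blog with 1200 posts: three lines, starting at 1, 501 and 1001.
for line in blogger_sitemap_lines("https://example.blogspot.com/", 1200):
    print(line)
```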

Robots.txt Type 3

```text
User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Disallow: /b
Allow: /

Sitemap: https://www.yourblogurl.blogspot.com/atom.xml?redirect=false&start-index=1&max-results=500
Sitemap: https://www.yourblogurl.blogspot.com/atom.xml?redirect=false&start-index=501&max-results=500
```
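When these variants are copied from a web page, line breaks are easily lost, leaving several directives on one line (for example `...max-results=500Sitemap: ...`). Before pasting the code into Blogger, a rough check like the one below can catch that. It is a hypothetical helper, not an official validator: it only flags lines that do not start with one of the four directives used in this article, or that contain more than one of them.

```python
import re

# The four directives used in this article, lower-cased for comparison.
DIRECTIVES = ("user-agent", "disallow", "allow", "sitemap")
# Matches "Directive:" anywhere in a line, ignoring case.
DIRECTIVE_RE = re.compile(r"(user-agent|disallow|allow|sitemap)\s*:", re.IGNORECASE)


def find_suspicious_lines(robots_txt: str) -> list[int]:
    """Return the 1-based numbers of lines that look malformed.

    A line is flagged if it does not start with a known directive, or if it
    contains more than one "Directive:" (usually a sign of lost line breaks).
    """
    suspicious = []
    for number, raw in enumerate(robots_txt.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # ignore comments and surrounding spaces
        if not line:
            continue  # blank lines are fine
        starts_ok = line.lower().startswith(DIRECTIVES)
        if not starts_ok or len(DIRECTIVE_RE.findall(line)) > 1:
            suspicious.append(number)
    return suspicious


fused = (
    "Sitemap: https://example.blogspot.com/atom.xml?redirect=false&start-index=1&max-results=500"
    "Sitemap: https://example.blogspot.com/atom.xml?redirect=false&start-index=501&max-results=500"
)
print(find_suspicious_lines("User-agent: *\nDisallow: /search\nAllow: /\n"))  # []
print(find_suspicious_lines(fused))  # [1]
```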

How To Add Custom Robots.txt File In Blogger Step By Step

Now for the main part of this tutorial: how to add a custom robots.txt file in Blogger. Here are the steps.

Step 1 – Go to your Blogger blog.

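Step 2 – Navigate to Settings ›› Search Preferences ›› Crawlers and indexing ›› Custom robots.txt. (In the newer Blogger interface, the Crawlers and indexing section is directly under Settings.)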

Step 3 – Now enable the custom robots.txt content by selecting “Yes.”

Step 4 – Now paste your robots.txt file code in the box.

Step 5 – Click the Save Changes button.

Now you are done! See the infographic below for more information.

How to Check Your Robots.txt File

You can check this file on your blog by adding /robots.txt at the end of your blog URL in the web browser. For example:

http://www.yourblogurl.blogspot.com/robots.txt

When you visit the robots.txt URL, you will see the entire code of your custom robots.txt file. See the infographic below:
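If you prefer to check from a script rather than a browser, here is a minimal sketch using Python’s standard library. `fetch_robots_txt` is a hypothetical helper; its `opener` parameter defaults to `urllib.request.urlopen` and exists only so the function can be tried without network access.

```python
import io
from urllib.request import urlopen


def fetch_robots_txt(blog_url: str, opener=urlopen) -> str:
    """Download and return the robots.txt served at the root of blog_url."""
    url = blog_url.rstrip("/") + "/robots.txt"  # same as adding /robots.txt in the browser
    with opener(url, timeout=10) as response:
        return response.read().decode("utf-8")


# Real use (needs network access; placeholder address):
# print(fetch_robots_txt("https://www.yourblogurl.blogspot.com"))


def fake_opener(url, timeout):
    """Offline stand-in for urlopen that returns canned robots.txt content."""
    return io.BytesIO(b"User-agent: *\nDisallow: /search\nAllow: /\n")


print(fetch_robots_txt("https://www.yourblogurl.blogspot.com/", opener=fake_opener))
```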

28 Comments

What is the difference between Type 2 and Type 3?

They are the same; the only difference is that Type 2 is for up to 500 posts and Type 3 is for more than 500 posts.

I'm new here and I like your blog!

I enjoy reading it because I get a lot of information.

Can you please make a post on how to fix my problem with monetizing my site on Google AdSense?

I am a new blogger and I don't know anything about coding. So far my site has not been accepted for monetization.

Here is the message I always receive from Google AdSense:

"No content includes placeholder for site or apps under construction"

I don't understand what it means; I already have 29 posts on my site.

Please help me fix the problem!

Thanks, bro. It was a very helpful post, especially for new bloggers. I appreciate your hard work. Well done, bro.

You have made this tutorial very easy for your readers to understand. As a blogger, I am impressed by your writing skills and your sound knowledge. Keep going, and best of luck with your future posts.

There is probably a little information missing from your post: crawl budget. How can you manage it via the robots.txt file? Managing your crawl budget with the robots.txt file may help you rank better in the SERPs.

Nice post

thank you friends

Great information, sir. Thanks for the help.
