Hi, welcome to the Custom Robots.txt for Blogger tutorial. Who does not want to appear at the top of Google and other search engines? Of course you want to do everything possible to make sure crawlers don't pass over your content. In this tutorial, we learn how to create and add a custom robots.txt file in Blogger. Choosing good settings and adding a custom robots.txt file to your Blogger blog can help your search rankings.

Before we jump in, I would like to talk about what a robots.txt file is and why you should add a custom robots.txt file to your Blogger blog.

What Is a Custom Robots.txt File?

A robots.txt file lives at the root of your site. So, for the site www.example.com, the robots.txt file lives at www.example.com/robots.txt. robots.txt is a plain text file that follows the Robots Exclusion Standard. A robots.txt file consists of one or more rules. Each rule blocks (or allows) access for a given crawler to a specified file path on that website.

Here is a simple robots.txt file with two rules, explained below:

# Group 1

User-agent: Googlebot

Disallow: /nogooglebot/

# Group 2

User-agent: *

Allow: /

Sitemap: http://www.example.com/sitemap.xml

Explanation:

- The user agent named "Googlebot" should not crawl the folder http://example.com/nogooglebot/ or any subdirectories.

- All other user agents can access the entire site. (This could have been omitted and the result would be the same, as full access is the assumption.)

- The site's Sitemap file is located at http://www.example.com/sitemap.xml
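To see how a crawler reads these two groups, here is a minimal sketch using Python's standard-library `urllib.robotparser`. It is only an illustration. Python's parser does simple prefix matching and does not reproduce every detail of Google's own parser. The agent name `Bingbot` and the page paths are sample inputs, not part of the example above.

```python
from urllib.robotparser import RobotFileParser

# The two-group example from this section. Python's parser treats a blank
# line as the end of a group, so each group's lines are kept together here.
ROBOTS_TXT = """\
# Group 1
User-agent: Googlebot
Disallow: /nogooglebot/

# Group 2
User-agent: *
Allow: /

Sitemap: http://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

checks = [
    ("Googlebot", "http://www.example.com/nogooglebot/page.html"),  # blocked by Group 1
    ("Googlebot", "http://www.example.com/about.html"),             # no rule matches: allowed
    ("Bingbot", "http://www.example.com/nogooglebot/page.html"),    # Group 2 applies: allowed
]
for agent, url in checks:
    print(agent, url, parser.can_fetch(agent, url))

# Sitemap lines are collected independently of any group.
print(parser.site_maps())
```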

Basic Robots.txt Guidelines

You can use almost any text editor to create a robots.txt file. The text editor should be able to create standard UTF-8 text files. Don't use a word processor; word processors often save files in a proprietary format and can add unexpected characters, such as curly quotes, which can cause problems for crawlers.
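If you are unsure what your editor produces, a small check can catch the curly quotes mentioned above before you paste the file anywhere. This is a hypothetical helper, not part of any tool. The set of characters it flags is an assumption you can extend.

```python
# Curly quotes that word processors commonly substitute for straight ones.
CURLY_QUOTES = {"\u2018", "\u2019", "\u201c", "\u201d"}


def find_curly_quotes(text):
    """Return (line number, character) for every curly quote in the text."""
    problems = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for ch in line:
            if ch in CURLY_QUOTES:
                problems.append((lineno, ch))
    return problems


def to_robots_bytes(text):
    """Normalise line endings to a single newline, end with one, and encode as UTF-8."""
    return ("\n".join(text.splitlines()) + "\n").encode("utf-8")


draft = "User-agent: *\nDisallow: \u201c/private/\u201d"
print(find_curly_quotes(draft))
print(to_robots_bytes("User-agent: *\r\nDisallow: /"))
```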


Syntax

- robots.txt must be a UTF-8 encoded text file (which includes ASCII). Using other character sets is not possible.

- A robots.txt file consists of one or more groups.

- Each group consists of multiple rules or directives (instructions), one directive per line.

- A group gives the following information:

  - Who the group applies to (the user agent)

  - Which directories or files that agent can access, and/or

  - Which directories or files that agent cannot access.

- Groups are processed from top to bottom, and a user agent can match only one rule set, which is the first, most-specific group that matches a given user agent.

- The default assumption is that a user agent can crawl any page or directory not blocked by a Disallow: rule.

- Rules are case-sensitive. For instance, Disallow: /file.asp applies to http://www.example.com/file.asp, but not to http://www.example.com/FILE.asp.
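The case-sensitivity rule and the default-allow assumption can both be checked with Python's `urllib.robotparser`. Here it stands in for a real crawler. Its matching is simpler than Google's, but the two agree on this point. `Googlebot` is just the agent name passed in.

```python
from urllib.robotparser import RobotFileParser

parser = RobotFileParser()
parser.parse([
    "User-agent: *",
    "Disallow: /file.asp",
])

# Paths are compared character by character, so case matters.
print(parser.can_fetch("Googlebot", "http://www.example.com/file.asp"))    # False
# No rule matches /FILE.asp, so the default assumption (allowed) applies.
print(parser.can_fetch("Googlebot", "http://www.example.com/FILE.asp"))    # True
```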

The following directives are used in robots.txt files:

User-agent: [Required, one or more per group] The name of a search engine robot (web crawler software) that the rule applies to. This is the first line for any rule. Most Google user-agent names are listed in the Web Robots Database or in the Google list of user agents. Supports the * wildcard for a path prefix, suffix, or entire string. Using an asterisk (*) as in the example below will match all crawlers except the various AdsBot crawlers, which must be named explicitly. (See the list of Google crawler names.) Examples:

# Example 1: Block only Googlebot

User-agent: Googlebot

Disallow: /

# Example 2: Block Googlebot and Adsbot

User-agent: Googlebot

User-agent: AdsBot-Google

Disallow: /

# Example 3: Block all but AdsBot crawlers

User-agent: *

Disallow: /

Disallow: [At least one or more Disallow or Allow entries per rule] A directory or page, relative to the root domain, that should not be crawled by the user agent. If a page, it should be the full page name as shown in the browser; if a directory, it should end in a / mark. Supports the * wildcard for a path prefix, suffix, or entire string.
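Example 2 in the User-agent description above lists two User-agent lines in one group, so its single Disallow rule applies to both crawlers. The quick check below uses Python's `urllib.robotparser`. Treat it as an approximation. In particular, it does not implement Google's special handling of AdsBot under `User-agent: *`. `Bingbot` is just a sample agent that the group does not name.

```python
from urllib.robotparser import RobotFileParser

# Example 2: one group with two User-agent lines sharing one rule.
parser = RobotFileParser()
parser.parse([
    "User-agent: Googlebot",
    "User-agent: AdsBot-Google",
    "Disallow: /",
])

url = "http://www.example.com/index.html"
for agent in ["Googlebot", "AdsBot-Google", "Bingbot"]:
    print(agent, parser.can_fetch(agent, url))
# Googlebot and AdsBot-Google are blocked; Bingbot matches no group, so it is allowed.
```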

Allow: [At least one or more Disallow or Allow entries per rule] A directory or page, relative to the root domain, that should be crawled by the user agent just mentioned. This is used to override Disallow to allow crawling of a subdirectory or page in a disallowed directory. If a page, it should be the full page name as shown in the browser; if a directory, it should end in a / mark. Supports the * wildcard for a path prefix, suffix, or entire string.
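Here is a minimal sketch of Allow overriding Disallow for one page inside a blocked directory. The paths are hypothetical. The check again uses Python's `urllib.robotparser`, which applies the first rule that matches, so the Allow line is listed first. Google instead uses the most specific (longest) matching rule, so for Google the order of these two lines would not matter.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical paths: /private/ is blocked, but one page inside it is allowed.
parser = RobotFileParser()
parser.parse([
    "User-agent: *",
    "Allow: /private/press-kit.html",  # listed first because Python uses first match
    "Disallow: /private/",
])

base = "http://www.example.com"
print(parser.can_fetch("Googlebot", base + "/private/press-kit.html"))  # True
print(parser.can_fetch("Googlebot", base + "/private/notes.html"))      # False
print(parser.can_fetch("Googlebot", base + "/public/index.html"))       # True
```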

Sitemap: [Optional, zero or more per file] The location of a sitemap for this website. Must be a fully-qualified URL; Google doesn't assume or check http/https/www/non-www alternates. Sitemaps are a good way to indicate which content Google should crawl, as opposed to which content it can or cannot crawl. Example:

Sitemap: https://example.com/sitemap.xml

Sitemap: http://www.example.com/sitemap.xml

Useful robots.txt rules

Here are some common useful robots.txt rules:

| Rule | Sample |
|---|---|
| Disallow crawling of the entire website. Keep in mind that in some situations URLs from the website may still be indexed, even if they haven't been crawled. Note: this does not match the various AdsBot crawlers, which must be named explicitly. | User-agent: * Disallow: / |
| Disallow crawling of a directory and its contents by following the directory name with a forward slash. Remember that you shouldn't use robots.txt to block access to private content: use proper authentication instead. URLs disallowed by the robots.txt file might still be indexed without being crawled, and the robots.txt file can be viewed by anyone, potentially disclosing the location of your private content. | User-agent: * Disallow: /calendar/ Disallow: /junk/ |
| Allow access to a single crawler. | User-agent: Googlebot-news Allow: / User-agent: * Disallow: / |
| Allow access to all but a single crawler. | User-agent: Unnecessarybot Disallow: / User-agent: * Allow: / |
| Disallow crawling of a single webpage by listing the page after the slash. | User-agent: * Disallow: /private_file.html |
| Block a specific image from Google Images. | User-agent: Googlebot-Image Disallow: /images/dogs.jpg |
| Block all images on your site from Google Images. | User-agent: Googlebot-Image Disallow: / |
| Disallow crawling of files of a specific file type (for example, .gif). | User-agent: Googlebot Disallow: /*.gif$ |
| Disallow crawling of the entire site but still show AdSense ads on those pages: disallow all web crawlers other than Mediapartners-Google. This hides your pages from search results, but the Mediapartners-Google web crawler can still analyze them to decide what ads to show visitors to your site. | User-agent: * Disallow: / User-agent: Mediapartners-Google Allow: / |
| Match URLs that end with a specific string by using $. For instance, the sample blocks any URL that ends with .xls. | User-agent: Googlebot Disallow: /*.xls$ |
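Python's standard-library parser does not understand the * and $ wildcards used in the last rows of the table. The sketch below is a simplified, hypothetical matcher for a single rule path. In it, * matches any run of characters, and a trailing $ means the URL must end there. It shows how these patterns behave and is not a reproduction of Google's parser.

```python
import re


def robots_pattern_to_regex(pattern):
    """Translate one robots.txt path pattern into a compiled regular expression.

    '*' matches any run of characters (including none); a '$' at the very end
    means the URL must end there. Everything else is matched literally.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape regex metacharacters, then turn each escaped '*' back into '.*'.
    regex = re.escape(pattern).replace(r"\*", ".*")
    return re.compile(regex + ("$" if anchored else ""))


def rule_matches(pattern, path):
    """True if the rule applies to the path (plus query string, if any).

    Rules match from the start of the path, so re.match (not re.search) is used.
    """
    return robots_pattern_to_regex(pattern).match(path) is not None


print(rule_matches("/*.gif$", "/images/cat.gif"))             # True
print(rule_matches("/*.gif$", "/images/cat.gif?size=large"))  # False: $ anchors the end
print(rule_matches("/*.xls$", "/reports/q1.xlsx"))            # False
print(rule_matches("/calendar/", "/calendar/2020/"))          # True: plain prefix match
```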

Custom Robots.txt File In Blogger

Each blog hosted on Blogger has its own default robots.txt file, which looks something like this:

User-agent: Mediapartners-Google

Disallow:

User-agent: *

Disallow: /search

Disallow: /b

Allow: /

Sitemap: https://www.yourblogurl.blogspot.com/feeds/posts/default?orderby=updated
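To see what this default file allows, here is a small sketch with Python's `urllib.robotparser`. The blog address is the same placeholder used above. The label page and post URLs are sample Blogger-style paths chosen for illustration.

```python
from urllib.robotparser import RobotFileParser

# Blogger's default file from above (the blog address is a placeholder).
BLOGGER_DEFAULT = """\
User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Disallow: /b
Allow: /

Sitemap: https://www.yourblogurl.blogspot.com/feeds/posts/default?orderby=updated
"""

parser = RobotFileParser()
parser.parse(BLOGGER_DEFAULT.splitlines())

blog = "https://www.yourblogurl.blogspot.com"
label_page = blog + "/search/label/SEO"
post = blog + "/2020/03/post-url.html"

print(parser.can_fetch("Googlebot", label_page))             # False: /search is disallowed
print(parser.can_fetch("Googlebot", post))                   # True: falls through to Allow: /
print(parser.can_fetch("Mediapartners-Google", label_page))  # True: empty Disallow allows everything
print(parser.site_maps())
```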

Blogger Custom Robots.txt File Examples

Example 1

User-agent: Mediapartners-Google

Disallow:

User-agent: *

Disallow: /search

Allow: /

Sitemap: https://www.yourblogurl.blogspot.com/sitemap.xml

Example 2

User-agent: Mediapartners-Google

Disallow:

User-agent: *

Disallow: /search

Allow: /

Sitemap: https://www.yourblogurl.blogspot.com/feeds/posts/default?orderby=updated

Example 3

User-agent: Mediapartners-Google

Disallow:

User-agent: *

Disallow: /search

Allow: /

Sitemap: https://www.yourblogurl.blogspot.com/atom.xml?redirect=false&start-index=1&max-results=500

Sitemap: https://www.yourblogurl.blogspot.com/atom.xml?redirect=false&start-index=501&max-results=500

Custom Robots.txt File In Blogger Key Words

Let’s Understand the Custom Robots.txt File Completely:

The code above is divided into different segments. Let us first study each of them, and later we will find out how to add a custom robots.txt file to a Blogger site.

User-agent: Mediapartners-Google

This group is for the Google AdSense robot, which analyzes your pages so it can serve better-targeted ads on your blog. The empty Disallow: line below it means this robot may crawl every page. Whether or not you use Google AdSense on your blog, simply leave it as it is.

User-agent: *

This line applies the group to all crawlers. By default, our blog's label (tag) page links are blocked for search crawlers, which means search engines won't crawl our label pages because of the code below.

Disallow: /search

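That means any link whose path begins with /search right after the domain name will be ignored. This covers label pages and Blogger's search result pages. For example, the link to the label page for a label called SEO looks like https://yourblogurl.blogspot.com/search/label/SEO.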

If we remove Disallow: /search from the code above, crawlers can access our entire blog, including label and search pages, and crawl all its posts and pages. Here Allow: / refers to the root of the blog and everything below it. This means crawlers may crawl our homepage and every other page that is not blocked by a Disallow rule.

How To Disallow a Particular Post: Suppose we want to stop crawlers from crawling a specific post. We can add the line below to the User-agent: * group of the code:

Disallow: /yyyy/mm/post-url.html

Here yyyy and mm denote the year and month the post was published, respectively. For instance, if we published a post in March 2020, we would use the following format:

Disallow: /2020/03/post-url.html

To make this task easy, simply copy the post URL and remove the domain from the beginning.

How To Disallow a Particular Page: If we need to disallow a particular page, we can use exactly the same method as before. Simply copy the page URL and remove the site address from it, so that it looks like this: Disallow: /p/page-url.html
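The "copy the URL and remove the domain" step for posts and pages can be sketched as a small helper. `disallow_rule` is a hypothetical name, and the URLs are placeholders. The helper keeps only the URL path, so any query string is dropped. The check uses Python's `urllib.robotparser`, which applies the first matching rule, so the new lines are placed before `Allow: /`. Google itself uses the most specific match, so placement matters less there.

```python
from urllib.parse import urlparse
from urllib.robotparser import RobotFileParser


def disallow_rule(page_url):
    """Build a Disallow line by dropping the scheme, domain and query from a URL."""
    return "Disallow: " + (urlparse(page_url).path or "/")


post_rule = disallow_rule("https://yourblogurl.blogspot.com/2020/03/post-url.html")
page_rule = disallow_rule("https://yourblogurl.blogspot.com/p/page-url.html")
print(post_rule)  # Disallow: /2020/03/post-url.html
print(page_rule)  # Disallow: /p/page-url.html

# Check the result: only the chosen post and page are blocked.
parser = RobotFileParser()
parser.parse(["User-agent: *", "Disallow: /search", post_rule, page_rule, "Allow: /"])

blog = "https://yourblogurl.blogspot.com"
print(parser.can_fetch("Googlebot", blog + "/2020/03/post-url.html"))      # False
print(parser.can_fetch("Googlebot", blog + "/2020/03/another-post.html"))  # True
```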

Sitemap: http://yourblogurl.blogspot.com/feeds/posts/default?orderby=updated

This line points crawlers to the sitemap of your blog. When crawlers read your robots.txt file, they also find the route to your sitemap, so including it here helps them discover your content more efficiently. With the sitemap listed in robots.txt, crawlers can more easily find all of your posts and pages.

How To Add a Custom Robots.txt File In Blogger

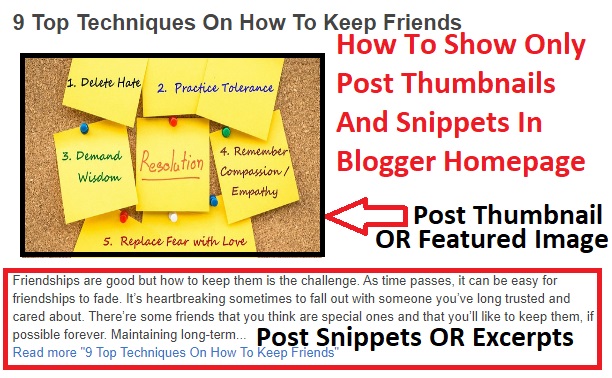

Step 1: Log in to your Blogger dashboard >> Settings. On the Settings page, scroll down until you find "Crawlers and indexing". Right under it, you will see "Enable custom robots.txt". Turn on the toggle beside it so that you can add a custom robots.txt file to your blog.

Step 2: Just below the "Enable custom robots.txt" toggle you turned on, you will see "Custom robots.txt". Go ahead and click on it.

Step 3: After you click on "Custom robots.txt", a small box with a text field will pop up. Copy the custom robots.txt file below that suits your blog and paste it inside the box. Don’t forget to replace https://yourblogurl.blogspot.com with your blog address or custom domain. Save your file when you're done!

Robots.txt Type 1

Use this custom robots.txt file if your sitemap is an XML sitemap that matches the one you submitted in Google Search Console (formerly Google Webmaster Tools). In other words, the sitemap you submitted in Google Search Console should be the same one included in your custom robots.txt file.

User-agent: Mediapartners-Google

Disallow:

User-agent: *

Disallow: /search

Allow: /

Sitemap: https://www.yourblogurl.blogspot.com/sitemap.xml

Robots.txt Type 2

Use this custom robots.txt file if your sitemap is an Atom sitemap that matches the one you submitted in Google Search Console (formerly Google Webmaster Tools). In other words, the sitemap you submitted in Google Search Console should be the same one included in your custom robots.txt file. Also use this file if your blog has no more than 500 posts.

User-agent: Mediapartners-Google

Disallow:

User-agent: *

Disallow: /search

Allow: /

Sitemap: https://www.yourblogurl.blogspot.com/atom.xml?redirect=false&start-index=1&max-results=500

Robots.txt Type 3

Use this custom robots.txt file if your sitemap is an Atom sitemap that matches the one you submitted in Google Search Console (formerly Google Webmaster Tools). In other words, the sitemap you submitted in Google Search Console should be the same one included in your custom robots.txt file. Also use this file if your blog has more than 500 posts but no more than 1,000. If your blog has more than 1,000 posts, you can use the same file and add another Sitemap line for each additional 500 posts.

User-agent: Mediapartners-Google

Disallow:

User-agent: *

Disallow: /search

Allow: /

Sitemap: https://www.yourblogurl.blogspot.com/atom.xml?redirect=false&start-index=1&max-results=500

Sitemap: https://www.yourblogurl.blogspot.com/atom.xml?redirect=false&start-index=501&max-results=500
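For larger blogs, the extra Sitemap lines can be generated rather than typed by hand. This is a sketch under two stated assumptions. First, in these feed URLs `start-index` is 1-based and `max-results` is the number of posts per request. Second, the page size of 500 matches the URLs in this section, but it has not been verified here as Blogger's limit. `blogger_atom_sitemaps` is a hypothetical helper name.

```python
def blogger_atom_sitemaps(blog_url, total_posts, page_size=500):
    """Return one 'Sitemap:' line per page of the Blogger Atom feed.

    Assumes start-index is 1-based and max-results is the number of posts per
    request, so pages start at 1, 501, 1001, ... when page_size is 500.
    """
    base = blog_url.rstrip("/")
    return [
        f"Sitemap: {base}/atom.xml?redirect=false"
        f"&start-index={start}&max-results={page_size}"
        for start in range(1, total_posts + 1, page_size)
    ]


# A blog with 1,200 posts needs three Sitemap lines.
for line in blogger_atom_sitemaps("https://www.yourblogurl.blogspot.com", 1200):
    print(line)
```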

Don’t forget to replace https://yourblogurl.blogspot.com with your blog address or custom domain. Save your file when you're done!

How to Check Custom Robots.txt File For Your Blog

You can check the custom robots.txt file on your blog by adding /robots.txt to the end of your blog URL in a web browser. For example:

http://www.yourblogurl.blogspot.com/robots.txt

Once you visit the robots.txt file URL, you will see the entire code you are using in your custom robots.txt file.
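The same check can be scripted with Python's `urllib.robotparser`. This is a minimal sketch. The helper names `robots_url` and `load_live_rules` are hypothetical, and `load_live_rules` needs network access.

```python
from urllib.parse import urljoin
from urllib.robotparser import RobotFileParser


def robots_url(blog_url):
    """robots.txt always sits at the root of the host, whatever page you start from."""
    return urljoin(blog_url, "/robots.txt")


def load_live_rules(blog_url):
    """Download and parse the blog's live robots.txt (needs network access)."""
    parser = RobotFileParser(robots_url(blog_url))
    parser.read()
    return parser


print(robots_url("https://yourblogurl.blogspot.com/2020/03/post-url.html"))
# https://yourblogurl.blogspot.com/robots.txt
```

For example, if your file keeps `Disallow: /search`, you would expect `load_live_rules("https://yourblogurl.blogspot.com").can_fetch("Googlebot", "https://yourblogurl.blogspot.com/search/label/SEO")` to return False. Be aware of how `read()` handles errors. A 401 or 403 response marks everything as disallowed, and any other 4xx response marks everything as allowed.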

I tried to cover as much as I could about how to add a custom robots.txt file to a Blogger (BlogSpot) blog. A well-configured custom robots.txt file helps search engines focus on your posts and pages rather than label and search result pages, which can support your blog's organic traffic. If you enjoyed this article, share it with friends who want to add a custom robots.txt file in Blogger and improve their site traffic. If you have any doubts about this, feel free to ask in the comment section below!


Contact us: info@deworldinshights.com
